SCREAMING Frog mother fuckers!

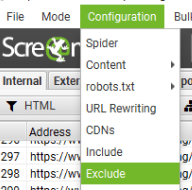

To exclude URLs, just go to:

Configuration > Exclude (in the very top menu bar)

To exclude URLs within a specific folder, use the following regex:

^https://www.mydomain.com/customer/account/.*

^https://www.mydomain.com/checkout/cart/.*

The above regexes will stop Screaming Frog from crawling the customer/account folder and the checkout/cart folder.
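To sanity-check patterns like these before a crawl, here's a minimal Python sketch. It assumes Screaming Frog excludes a URL when a pattern matches any part of it (so `re.search` is used), and that Python's `re` treats these simple patterns the same way the SEO Spider does. The sample URLs are made up.

```python
import re

# The two folder excludes from above. The dots are unescaped, as written, so
# each "." matches any character; that is harmless for these URLs.
EXCLUDES = [
    r"^https://www.mydomain.com/customer/account/.*",
    r"^https://www.mydomain.com/checkout/cart/.*",
]


def is_excluded(url: str) -> bool:
    """True if any exclude pattern matches the URL (partial matching assumed)."""
    return any(re.search(pattern, url) for pattern in EXCLUDES)


for url in [
    "https://www.mydomain.com/customer/account/login/",
    "https://www.mydomain.com/checkout/cart/",
    "https://www.mydomain.com/womens/dresses/",
]:
    print(is_excluded(url), url)
```

The first two URLs come back `True` (excluded) and the dresses page `False` (still crawled). The same cart URL on another domain, e.g. `https://www.mydomain.de/checkout/cart/`, would not match, because the host is hard-coded.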

Alternatively, use patterns that match any domain. This is easier for me, as I have to check and crawl lots of international domains with the same site structure and folders:

^https?://[^/]+/customer/account/.*

^https?://[^/]+/checkout/cart/.*
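A quick Python check of the any-domain versions, assuming partial (search-style) matching as in the SEO Spider. The international domains below are hypothetical.

```python
import re

# https? accepts http or https; [^/]+ matches the host name (any run of
# characters up to the first slash), so one pattern covers every domain.
EXCLUDES = [
    r"^https?://[^/]+/customer/account/.*",
    r"^https?://[^/]+/checkout/cart/.*",
]


def is_excluded(url: str) -> bool:
    """True if any exclude pattern matches the URL (partial matching assumed)."""
    return any(re.search(pattern, url) for pattern in EXCLUDES)


# Hypothetical international versions of the same site.
for url in [
    "https://www.mydomain.co.uk/customer/account/",
    "http://www.mydomain.de/checkout/cart/",
    "https://www.mydomain.fr/en/customer/account/",  # extra /en/ folder
]:
    print(is_excluded(url), url)
```

The first two print `True`. The last prints `False`: `[^/]+` can't cross a slash, so the folder has to sit directly after the domain, and an extra language folder like `/en/` slips through.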

Excluding Images

I've just been using the image file extensions to block them from the crawl, e.g.:

.*\.jpg$

You can also block them in the Configuration > Spider menu.
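Here's how a loose `jpg` pattern compares with one anchored on the file extension. It's a Python sketch that assumes partial matching, with made-up URLs.

```python
import re

LOOSE = r".*jpg"      # "jpg" anywhere in the URL
STRICT = r".*\.jpg$"  # only URLs that end in ".jpg"

for url in [
    "https://www.mydomain.com/media/hero.jpg",
    "https://www.mydomain.com/blog/jpg-vs-png/",
    "https://www.mydomain.com/media/hero.jpg?width=800",
]:
    loose = bool(re.search(LOOSE, url))
    strict = bool(re.search(STRICT, url))
    print(f"loose={loose} strict={strict} {url}")
```

The loose pattern also drops the blog post about JPGs. The strict one misses image URLs with a query string, though a parameter exclude such as `^.*\?.*` catches those anyway. In Python the match is case-sensitive, so `.JPG` wouldn't be caught by either.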

Excluding Parameter URLs

This appears to do the job:

^.*\?.*

My typical “Excludes” looks like this:

^https?://[^/]+/customer/account/.*

^https?://[^/]+/checkout/cart/.*

^.*\?.*

jpg$

png$

\.js$

\.css$
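To see which rule catches which URL, this Python sketch runs a list like the one above against a few made-up URLs and reports the first pattern that fires. It assumes partial matching. The dots in `\.js$` and `\.css$` are escaped so they only match a literal dot.

```python
import re
from typing import Optional

# A typical exclude list, one pattern per entry.
TYPICAL_EXCLUDES = [
    r"^https?://[^/]+/customer/account/.*",
    r"^https?://[^/]+/checkout/cart/.*",
    r"^.*\?.*",  # any URL with a query string (parameter URLs)
    r"jpg$",
    r"png$",
    r"\.js$",
    r"\.css$",
]


def first_match(url: str) -> Optional[str]:
    """Return the first pattern that matches url, or None if it would be crawled."""
    for pattern in TYPICAL_EXCLUDES:
        if re.search(pattern, url):
            return pattern
    return None


for url in [
    "https://www.mydomain.com/womens/dresses/",
    "https://www.mydomain.com/womens/dresses/?colour=red",
    "https://www.mydomain.com/static/app.js",
    "https://www.mydomain.com/media/logo.png",
]:
    print(first_match(url) or "crawled", "<-", url)
```

Reporting the matching rule rather than a plain yes/no makes it easier to spot a pattern that's catching more than intended.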

Update – you can also just use this to block any URL containing an /account/ or /cart/ folder:

/account/|/cart/
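A short Python check of the alternation pattern, assuming partial matching. The URLs are hypothetical.

```python
import re

# "|" means "or": the URL is excluded if it contains either folder anywhere.
PATTERN = r"/account/|/cart/"

for url in [
    "https://www.mydomain.com/customer/account/login/",
    "https://www.mydomain.de/en/checkout/cart/",
    "https://www.mydomain.com/cart-accessories/",
]:
    print(bool(re.search(PATTERN, url)), url)
```

With no anchor, it catches the `/en/` URL that the `[^/]+` patterns miss. It also catches any /account/ or /cart/ folder anywhere on the site, so it's broader. The `/cart-accessories/` page is not matched, because the pattern needs a slash after "cart".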

Update:

I'm currently using this for my excludes config, as I actually want to crawl images:

^https?://[^/]+/customer/account/.*

^https?://[^/]+/checkout/cart/.*

^.*\?.*

\.js$

\.css$

- You can also exclude JS and CSS from the crawl in the Configuration > Spider settings, but I find it slightly quicker this way

- If you are crawling with JavaScript rendering, you might want to crawl JS files too, depending on whether they're needed to follow any JS links. (It's generally a bad idea to have JS links; if you do, have an HTML fallback or prerendering in place.)
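If you switch between normal and JS-rendered crawls, a small script can build the exclude list for each. This Python sketch uses a hypothetical `build_excludes` helper. It leaves images crawlable and drops the `.js` rule when JS rendering is on. It assumes the Exclude box takes one pattern per line and that matching is partial.

```python
import re
from typing import List


def build_excludes(js_rendering: bool) -> List[str]:
    """Hypothetical helper that assembles the current exclude list.

    Images are deliberately not excluded. When crawling with JavaScript
    rendering, the .js pattern is left out so JS files can still be fetched.
    """
    patterns = [
        r"^https?://[^/]+/customer/account/.*",
        r"^https?://[^/]+/checkout/cart/.*",
        r"^.*\?.*",
    ]
    if not js_rendering:
        patterns.append(r"\.js$")
    patterns.append(r"\.css$")
    return patterns


def is_excluded(url: str, patterns: List[str]) -> bool:
    """True if any pattern matches part of the URL."""
    return any(re.search(p, url) for p in patterns)


# Print the list, one pattern per line, ready to paste into the Exclude box.
print("\n".join(build_excludes(js_rendering=False)))
```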